GRPO with Verifiable (Binary) Rewards Is an Adaptive Weighted Contrastive Loss

1. Group Relative Policy Optimization

The goal of this short blog post is to understand GRPO, which was used successfully to train the DeepSeek models. We limit our analysis to binary rewards, or what the Tulu authors call RLVR (Reinforcement Learning with Verifiable Rewards).

Understanding GRPO with Rule Based (Binary) Reward

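GRPO has been used successfully in DeepSeek models (V3, Math, and R1), especially with rule-based rewards (verifiable rewards, i.e., binary rewards). The goal of this note is to understand it. For a prompt $q$ and an output $o$ sampled from the old policy $\pi_{\theta_{\text{old}}}$, GRPO optimizes the following objective:

$$\max_{\theta}\; \mathbb{E}_{q}\, \mathbb{E}_{o \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)} \left[ f_{\epsilon}\!\left( \frac{\pi_{\theta}(o \mid q)}{\pi_{\theta_{\text{old}}}(o \mid q)},\; A(q, o) \right) \right] - \beta\, \mathrm{KL}\!\left( \pi_{\theta} \,\|\, \pi_{\text{ref}} \right)$$

Where the advantage function is the standardized reward

$$A(q, o) = \frac{r(q, o) - \mu(q)}{\sigma(q)},$$

where

$$\mu(q) = \mathbb{E}_{o' \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)}\, r(q, o'), \qquad \sigma^{2}(q) = \mathrm{Var}_{o' \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)}\, r(q, o')$$

(both estimated in practice from a group of sampled outputs).

And $f_{\epsilon}$ is the clipped surrogate

$$f_{\epsilon}(x, y) = \min\!\big( x\,y,\; \mathrm{clip}(x,\, 1 - \epsilon,\, 1 + \epsilon)\, y \big).$$

Note that in our context, if $y > 0$

$$f_{\epsilon}(x, y) = y\, \min(x,\, 1 + \epsilon),$$

and if $y < 0$

$$f_{\epsilon}(x, y) = y\, \max(x,\, 1 - \epsilon).$$

We will assume that we have a rule-based reward that evaluates the correctness of a reasoning chain or the execution of code, meaning that $r(q, o) \in \{0, 1\}$. Writing $p = p(q) = \mathbb{P}_{o \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)}\big( r(q, o) = 1 \big)$ for the success probability of the old policy, with $0 < p < 1$, we have $\mu(q) = p$ and $\sigma^{2}(q) = p(1 - p)$, so

$$A(q, o) = \begin{cases} \dfrac{1 - p}{\sqrt{p(1 - p)}} & \text{if } r(q, o) = 1, \\[2ex] \dfrac{-p}{\sqrt{p(1 - p)}} & \text{if } r(q, o) = 0, \end{cases}$$

which simplifies to:

$$A(q, o) = \begin{cases} \sqrt{\dfrac{1 - p}{p}} & \text{if } r(q, o) = 1, \\[2ex] -\sqrt{\dfrac{p}{1 - p}} & \text{if } r(q, o) = 0. \end{cases}$$

Hence we have:

$$\mathbb{E}_{o \sim \pi_{\theta_{\text{old}}}(\cdot \mid q)} \left[ f_{\epsilon}\!\left( \frac{\pi_{\theta}(o \mid q)}{\pi_{\theta_{\text{old}}}(o \mid q)},\; A(q, o) \right) \right] = \sqrt{\frac{1 - p}{p}}\; \mathbb{E}_{o \sim \pi_{\theta_{\text{old}}}} \left[ \min\!\left( \frac{\pi_{\theta}(o \mid q)}{\pi_{\theta_{\text{old}}}(o \mid q)},\, 1 + \epsilon \right) \mathbb{1}_{r(q, o) = 1} \right] - \sqrt{\frac{p}{1 - p}}\; \mathbb{E}_{o \sim \pi_{\theta_{\text{old}}}} \left[ \max\!\left( \frac{\pi_{\theta}(o \mid q)}{\pi_{\theta_{\text{old}}}(o \mid q)},\, 1 - \epsilon \right) \mathbb{1}_{r(q, o) = 0} \right],$$

and hence the overall cost is obtained by taking the expectation over $q$.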

A full derivation is available here.
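As a quick numerical check of the binary-reward advantage, the sketch below standardizes a group of 0/1 rewards and compares the result with the closed forms $\sqrt{(1-p)/p}$ for successes and $-\sqrt{p/(1-p)}$ for failures. The function name `binary_group_advantages`, the sample group, and the choice to return zero advantages when every reward in the group is identical are assumptions made for illustration; practical implementations typically add a small constant to the standard deviation instead.

```python
import math


def binary_group_advantages(rewards):
    """Standardize 0/1 rewards within one group of sampled outputs.

    Hypothetical helper: returns (p, advantages), where p is the
    empirical success rate of the group.
    """
    n = len(rewards)
    p = sum(rewards) / n
    if p == 0.0 or p == 1.0:
        # Assumption: with zero variance there is no learning signal.
        return p, [0.0] * n
    std = math.sqrt(p * (1.0 - p))  # population std of a 0/1 sample
    return p, [(r - p) / std for r in rewards]


# Hypothetical group: 2 successes out of 8 sampled outputs.
rewards = [1, 0, 0, 1, 0, 0, 0, 0]
p, advantages = binary_group_advantages(rewards)

# Closed-form weights for the same success rate.
w_plus = math.sqrt((1.0 - p) / p)
w_minus = math.sqrt(p / (1.0 - p))

print(f"p = {p:.2f}")
print(f"advantage of a success: {advantages[0]:.4f}  closed form: {w_plus:.4f}")
print(f"advantage of a failure: {advantages[1]:.4f}  closed form: {-w_minus:.4f}")
```

Both columns should agree up to floating-point error, since standardizing a 0/1 variable with mean $p$ gives exactly these two values.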

Loss Interpretation

We see that GRPO is effectively a weighted contrastive loss that is weighted by a ratio depending on the probability of success of the old policy (p):

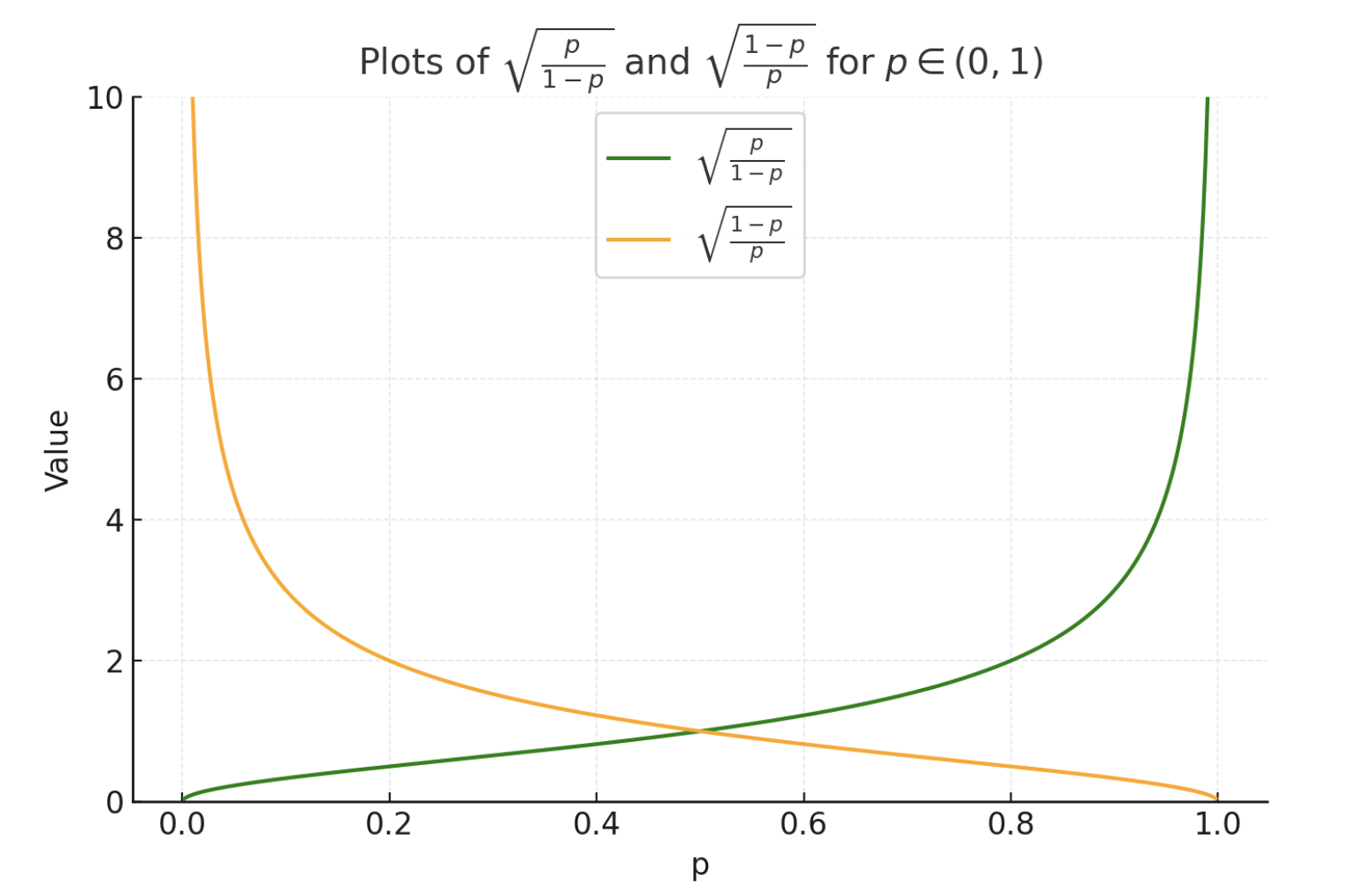

From the weight plots we see that:

- If the success probability of the old policy is high (say p > 0.5), the weight on successful outputs is low, since the old policy is already good, and the weight on failing outputs is high, so failures are penalized more.

- If the success probability of the old policy is low (say p < 0.5), the weight on successful outputs is high, since we want to reinforce those successes, while failing outputs are still penalized but with a small weight.
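The two regimes above can be tabulated directly. This minimal sketch assumes the weights are the ones obtained by standardizing a Bernoulli(p) reward, namely $\sqrt{(1-p)/p}$ on successes and $\sqrt{p/(1-p)}$ on failures; the function name `contrastive_weights` and the grid of `p` values are illustrative choices.

```python
import math


def contrastive_weights(p):
    """Return (success_weight, failure_weight) for old-policy success rate p.

    Assumes 0 < p < 1; the weights are undefined at the endpoints.
    """
    w_plus = math.sqrt((1.0 - p) / p)   # weight on correct outputs
    w_minus = math.sqrt(p / (1.0 - p))  # weight on wrong outputs
    return w_plus, w_minus


for p in (0.1, 0.25, 0.5, 0.75, 0.9):
    w_plus, w_minus = contrastive_weights(p)
    print(f"p={p:.2f}  success weight={w_plus:.3f}  failure weight={w_minus:.3f}")
```

Under this form the two weights are reciprocals of each other, so they cross at p = 0.5, where both equal 1.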

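More observations due to clipping, where $\epsilon$ is the clipping threshold:

- for correct outputs, the cost is constant once $\pi_{\theta}(o \mid q) / \pi_{\theta_{\text{old}}}(o \mid q) \ge 1 + \epsilon$, so there is no incentive to raise their probability further

- for wrong outputs, the cost is constant once $\pi_{\theta}(o \mid q) / \pi_{\theta_{\text{old}}}(o \mid q) \le 1 - \epsilon$, so there is no incentive to lower their probability further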
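The effect of clipping can be seen by evaluating the PPO-style clipped term $\min(xA,\ \mathrm{clip}(x, 1-\epsilon, 1+\epsilon)\,A)$ for a positive and a negative advantage, where $x$ is the probability ratio between the new and old policy. This is a sketch only: the name `clipped_surrogate`, the value `eps=0.2`, and the unit advantages are assumptions chosen for illustration.

```python
def clipped_surrogate(ratio, advantage, eps=0.2):
    """Clipped term: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = max(1.0 - eps, min(ratio, 1.0 + eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# Correct output (A > 0): the term equals A * min(ratio, 1 + eps),
# so it stops growing once ratio >= 1 + eps.
for ratio in (0.5, 1.0, 1.2, 2.0, 3.0):
    print(f"A=+1  ratio={ratio:.1f}  term={clipped_surrogate(ratio, 1.0):+.2f}")

# Wrong output (A < 0): the term equals A * max(ratio, 1 - eps),
# so it stops changing once ratio <= 1 - eps.
for ratio in (0.1, 0.5, 0.8, 1.0, 2.0):
    print(f"A=-1  ratio={ratio:.1f}  term={clipped_surrogate(ratio, -1.0):+.2f}")
```

In the flat regions the gradient with respect to the ratio is zero, which is why already-reinforced successes and already-suppressed failures stop contributing to the update.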

2. Conclusion

In summary, the standardized reward (the advantage function) used in GRPO results in an interesting adaptive weighted contrastive loss: if the probability of success of the old policy is high, wrong answers are penalized more than correct ones are reinforced. If the probability of success of the old policy is low, correct answers are reinforced more than wrong answers are penalized.

References

- Guo, Daya, et al. “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning.” arXiv preprint arXiv:2501.12948 (2025).

- Shao, Zhihong, et al. “DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models.” arXiv preprint arXiv:2402.03300 (2024).

- Lambert, Nathan, et al. “TULU 3: Pushing Frontiers in Open Language Model Post-Training.” arXiv preprint arXiv:2411.15124 (2024).