GRPO Amplifies the Success Rate of A Policy via An implicit Fixed Point Iteration

In the previous post, we established that GRPO with verifiable rewards can be seen as a weighted contastive policy optimization, where positive and negative samples are synthetic data sampled from the old policy and labeled via the verifiable reward. In this post we will review our recent preprint that builds on this observation and analyses the dynamics of GRPO and the probability of success of the verifiable reward under GRPO’s optimized policy.

1 GRPO’s Success Rate Recursion

GRPO with no Clipping Equivalent Contrastive Loss formulation

For

Let

We can write GRPO with no clipping as the following contrastive optimization:

where

GRPO Iterations and Optimal Policy

We drop now the optimization on the parameter space and optimize on the space of policies hence GRPO iterations can be written follows for

and

The optimal policy for

where

GRPO’s Probability of Success Recursion

Computing now the success rate

where

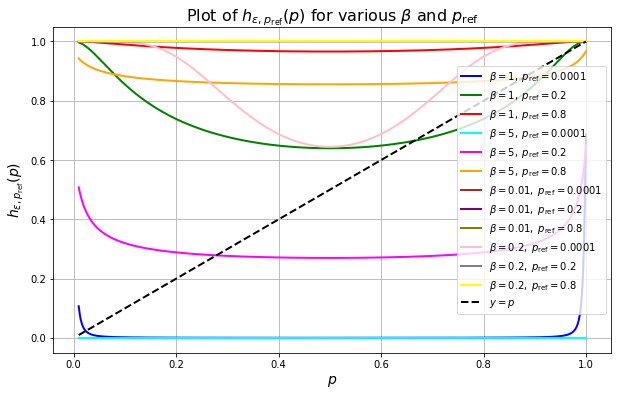

2 GRPO Amplifies Success Rate via An Implicit Fixed Point Iteration

We see that the success rate satisfies a fixed point iterations and the limit of this recursion as

- the sequence

- the fixed point success rate

3 Conclusion

In summary, GRPO with verifiable rewards iterations leads to a fixed point iteration on the success rate of the policy. Under mild conditions, the limit success rate is guaranteed to amplify the success rate of the reference model and the local convergence of the iterates to the limit point is also guaranteed.